By Ryan Tym, Director, Lantern

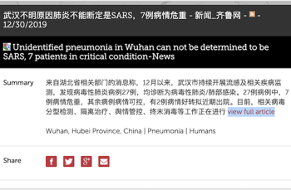

It was not a person who first sounded the alarm about COVID-19. It was artificial intelligence. HealthMap, a website run by Boston Children’s Hospital, uses AI to scan social media, news reports, internet search queries, and so on for signs of disease outbreaks. On 30 December 2019, it issued a one-line email bulletin about a new type of pneumonia in Wuhan, China.

It’s an example of just how embedded in our lives AI already is. From your iPhone recognising your face to Netflix predicting your next favourite box set, AI is everywhere: predicting crop problems, optimising grocery delivery routes, resolving customer support queries, managing the heating in your home, and so on.

Yet it has a trust problem. A 2019 study of 5,000 people around the world for cloud software firm Pega found only 30% feel comfortable with businesses using AI to interact with them. It’s easy to see why. From Rutger Hauer’s tears in the rain to Charlie Brooker’s dark musings in Black Mirror, AI has been consistently portrayed in popular culture as a threat.

Lost opportunities

This mistrust and fear has slowed and limited the adoption of AI, and that is a major problem not only for those running AI businesses but for humanity as a whole. At the topline, Accenture research on the impact of AI in 12 developed economies has suggested that AI could double annual economic growth rates in 2035.

On a more granular, visceral level, slower adoption will cause very real problems. It’s the girl losing out on AI directing her learning most effectively. It’s the family whose home burns down because there was no AI-powered robotic emergency team.

It’s the old man on a hospital ward who could have had an AI surgeon operate on him. It’s all the people who in the midst of a pandemic can’t access the information about local services and policing that they need.

Right now there are AI firms taking solutions to the market. If they don’t find ways to build trust then not only will they struggle from a commercial point of view but society will suffer too. We need to find a way to communicate the benefits of AI, to give it a human face – we need to help people make friends with AI.

Making friends with AI

For most AI firms the core issue is that they focus too heavily on product at the expense of audience. Typically they are led by CTOs: brilliant technicians who are rightly proud of the product they have created and excited by its potential to transform our lives. Yet trust is engendered by looking beyond product and connecting instead on an emotional, more human level.

In simple terms, people don’t care what 4G is; they do care that they can stream Game of Thrones on the bus to work. Likewise, classical music streaming service Primephonic could talk about its superior bit rate or it could celebrate the uplifting journey that classical music can take you on.

Chatbot Futr could talk about how it enables AI-powered conversations across messaging channels, or it could show us all the ways it enables better conversations, liberating us and making our lives better.

Sometimes it’s not a focus on technology that’s the issue, it’s highlighting the disruptive potential of the product. Disruption can be a great story to tell, but often people want reassurance, not disruption. The key is to focus on audience and what they want.

Alongside brand messaging, AI firms can use visual identity to build trust. They can look beyond the obvious futuristic iconography, imagery and colours to something softer, warmer, more human.

Opportunity and responsibility

Never before has humankind had the capacity to create such capable machines. Never before has it had as great a need for these machines, and we need to exploit their full potential. HealthMap spotted the outbreak, but too late. For the next virus, will we have an AI system in place that spots it earlier and that we trust enough to take decisive, early action?

Those running AI firms have an opportunity and a responsibility. The opportunity is to find ways to build trust in their technology and products, so that people become more open to the possibilities AI offers. The responsibility is to seize that opportunity.